📘 Read online: a memo on separating voice from music

A short book on separating the voice from the music in any video clip.

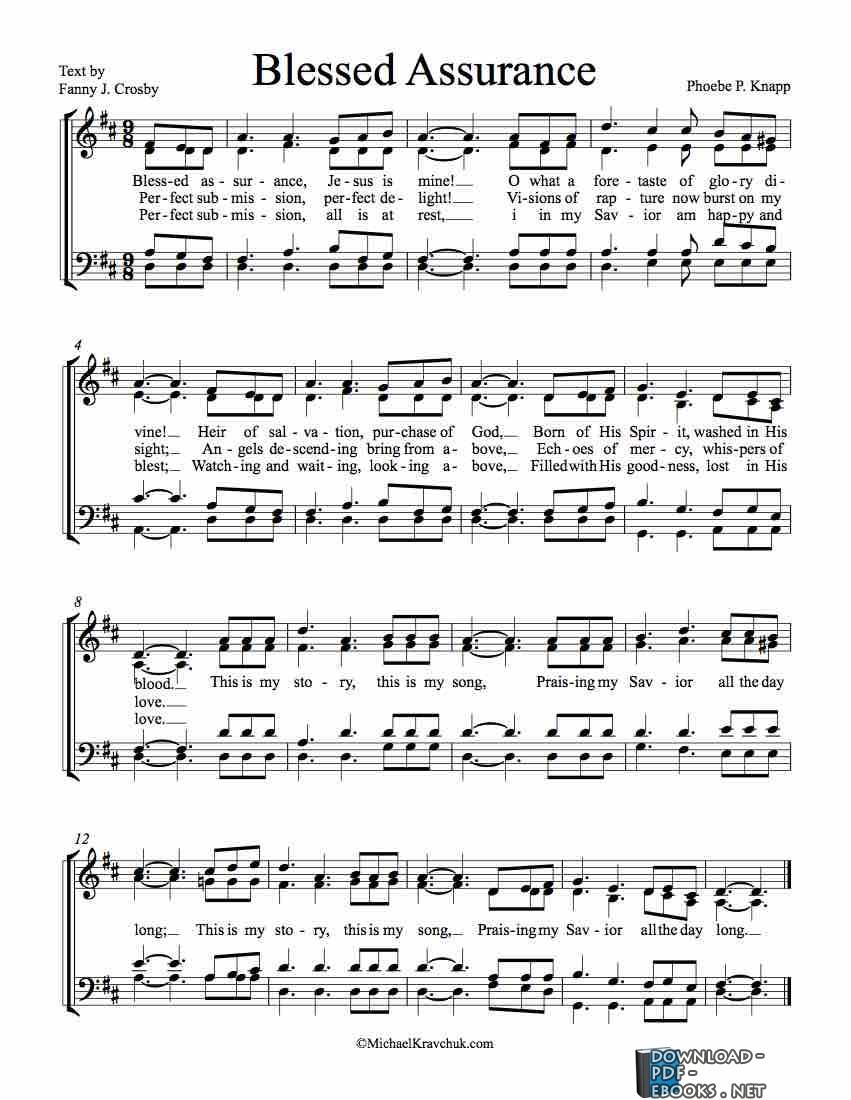

Bhiksha Raj, Paris Smaragdis, Madhusudhana Shashanka
Mitsubishi Electric Research Labs, Cambridge, MA 02139

Rita Singh
Carnegie Mellon University, Pittsburgh, PA 15213

ABSTRACT

In this paper we present an algorithm for separating singing voices from background music in popular songs. The algorithm is derived by modelling the magnitude spectrogram of audio signals as the outcome of draws from a discrete bivariate random process that generates time-frequency pairs. The spectrogram of a song is assumed to have been obtained through draws from the distributions underlying the music and the vocals, respectively. The parameters of the underlying distributions are learnt from the observed spectrogram of the song. The spectrogram of the separated vocals is then derived by estimating the fraction of draws that were obtained from the vocal distribution. We present the algorithm within a framework that allows popular songs to be personalized by separating out the vocals, processing them to suit one's own tastes, and remixing them. Our experiments show that we are able to separate the vocals in a song effectively and personalize them to our tastes.

Index Terms— Probabilistic Latent Component Decomposition, Signal Separation

1. INTRODUCTION

We introduce a framework for personalizing music by changing its inherent characteristics through signal processing. In this framework, pre-recorded music, as exemplified by popular movie songs and independent albums by singers in popular genres worldwide, is first separated into its components, then modified automatically and remixed to sound personally pleasing to an individual listener. Our motivation was initially to make some extremely high-pitched female vocals in Indian movies sound more pleasing by lowering the singer's pitch to a softer, more natural level without affecting the overall quality of the song and the background music.
Note that in making this statement we intend to criticize neither Indian female singers nor the Indian listeners who find high-pitched voices pleasing. We merely point out the well-known fact that musical taste is acquired, and what sounds pleasing to one group of people may not sound equally pleasing to another group that has been exposed to altogether different strains of music. We recognize that in most cases these songs are otherwise beautiful creations, and our initial aim was merely to create technology that would present this facet of Indian popular music to the wider world. In retrospect, we found that such a framework has numerous uses, as we explain later in this paper.

To understand how our framework functions, we first need to understand how most studio-recorded music is currently produced throughout the world. A good piece of popular music, such as an Indian movie song, is usually a pleasing combination of background music and one or more foreground singing voices. In a typical production, multiple channels of music and the singer are recorded separately. Individual channels are edited and/or corrected, their relative levels are adjusted, and the signals are mixed down to a small number of channels, typically two. The final sound we hear is the outcome of this process.

The development of our framework begins by addressing the reversal of this process. Given a segment of a song that includes vocals and background music, is it possible to separate these components to extract, say, the singer in isolation? This is the topic we address in this paper. We do not attempt to invert the mixing process completely and separate the song into all of its component channels (although such separation is certainly not beyond the scope of the technique presented here); we are content to separate the foreground singer from the background music.
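The production chain just described (separately recorded channels, level adjustment, mixdown to two channels) can be sketched as a small function. This is only an illustration of the forward process the paper sets out to invert, not part of the authors' method; the helper name `mix_down`, the decibel gains, the pan values, and the constant-power pan law are assumptions chosen for the example, and per-channel editing or correction is not modelled.

```python
import numpy as np

def mix_down(tracks, gains_db, pans):
    """Mix mono tracks down to a stereo pair (hypothetical helper).

    tracks   : sequence of equal-length 1-D arrays, one per recorded channel
    gains_db : per-track level adjustment in decibels
    pans     : per-track pan position in [-1, 1] (-1 = hard left, +1 = hard right)
    A constant-power pan law is assumed here; real mixing practice varies.
    """
    n = len(tracks[0])
    stereo = np.zeros((2, n))
    for x, g_db, pan in zip(tracks, gains_db, pans):
        x = np.asarray(x, dtype=float)
        gain = 10.0 ** (g_db / 20.0)            # dB -> linear amplitude
        theta = (pan + 1.0) * np.pi / 4.0       # map [-1, 1] to [0, pi/2]
        stereo[0] += gain * np.cos(theta) * x   # left channel
        stereo[1] += gain * np.sin(theta) * x   # right channel
    return stereo

# Example: a "voice" tone panned centre and a quieter "backing" tone panned left.
sr = 8000
t = np.arange(sr) / sr
voice = np.sin(2 * np.pi * 440.0 * t)
backing = 0.5 * np.sin(2 * np.pi * 110.0 * t)
song = mix_down([voice, backing], gains_db=[0.0, -6.0], pans=[0.0, -0.5])
```

Because each output channel is a weighted sum of the input tracks, the stereo mix of several tracks equals the sum of their individual mixes; separation has to recover one of those summands from the total alone.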
The separation of foreground vocals from background musical accompaniment is a non-trivial task that has so far attracted little attention in the scientific community, although several related topics, such as automatic transcription of music, separation of musical constituents from an ensemble, and separation of mixed speech signals, have all received significant attention in recent times. The literature on separating vocals from background music is relatively sparse. Li and Wang [1] perform the separation using principles of Computational Auditory Scene Analysis (CASA). In their approach, the pitch of the foreground voice is detected; spectro-temporal components presumed to belong to the voice are then identified from the pitch and other auditory principles and grouped together to extract the spectrum of the voice, from which, in turn, the signal is extracted. Similar CASA-based techniques have also been attempted by Wang [2]. Meron and Hirose [3] address the simpler problem of separating background piano sounds from a singing voice. Sinusoidal components for both the piano and the voice are learned from training examples and used to perform separation with a least-squares approach. Alternatively, the musical score for the background is used as prior information to enable the separation. Other proposals for separating music from singing voices have followed similar approaches, relying either on explicitly stated harmonic relationships between spectral peaks or on prior knowledge obtained from a musical score. The framework described in this paper, on the other hand, takes none of these approaches. Instead, it is built on a purely statistical method, in which the song is hypothesized to be the combined output of two generative models, one that generates the singing voice and the other the background music.
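The two-generative-model hypothesis can be illustrated with the draw-based view from the abstract: each source is a discrete distribution over time-frequency cells, and the observed spectrogram is the histogram of the draws from both. The sketch below is a toy under stated assumptions: the 4 x 6 grid, the distributions `p_voice` and `p_music`, the draw counts, and the helper `draw_spectrogram` are hypothetical, and a real magnitude spectrogram would be treated as scaled counts rather than literal integers.

```python
import numpy as np

def draw_spectrogram(p_tf, n_draws, rng):
    """Histogram over (time, frequency) cells of n_draws independent draws
    from the discrete distribution p_tf (a T x F array summing to 1)."""
    T, F = p_tf.shape
    idx = rng.choice(T * F, size=n_draws, p=p_tf.ravel())
    return np.bincount(idx, minlength=T * F).reshape(T, F)

rng = np.random.default_rng(0)
T, F = 4, 6

# Hypothetical "voice" process: uniform over frequency bins 3 and 4.
p_voice = np.zeros((T, F))
p_voice[:, 3:5] = 1.0
p_voice /= p_voice.sum()

# Hypothetical "music" process: uniform over frequency bins 0 to 3.
p_music = np.zeros((T, F))
p_music[:, 0:4] = 1.0
p_music /= p_music.sum()

h_voice = draw_spectrogram(p_voice, 5000, rng)
h_music = draw_spectrogram(p_music, 5000, rng)

# The observed "song" is the cumulative histogram of both sets of draws.
h_song = h_voice + h_music

# Share of each cell's draws that came from the voice process (0 where empty).
frac_voice = np.divide(h_voice, h_song, out=np.zeros((T, F)), where=h_song > 0)
```

The per-cell share of voice draws is what a separator needs to estimate. In expectation it equals n_v P_v(t,f) / (n_v P_v(t,f) + n_m P_m(t,f)), which works out to 2/3 for frequency bin 3 of this toy grid, where both processes overlap.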
What distinguishes our approach from other statistical methods for signal separation (e.g. [4], [5]) is the nature of the statistical model used. We model individual frequencies as the outcomes of draws from a discrete random process, and the magnitude spectra of the signal as the outcome of several draws from this process. The model is perfectly additive: the spectrogram of a mixed signal is simply modeled as the cumulative histogram of the outcomes of draws from the processes underlying each of its constituent signals. The problem of …
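A minimal sketch of how the parameters of such a model could be learnt and the vocal spectrogram recovered, assuming a standard symmetric probabilistic latent component factorisation P(t,f) = sum_z P(z) P(t|z) P(f|z) fitted by expectation-maximization. The factorisation, the helper names `plca` and `separate`, the option of holding some frequency distributions fixed (here used as hypothetical pre-learned music components), and the toy data are all assumptions for illustration, not necessarily the paper's exact procedure. Separation follows the abstract: each cell of the spectrogram is scaled by the expected fraction of its draws attributed to the voice components.

```python
import numpy as np

def plca(X, n_comp, n_iter=200, seed=0, fixed_pf=None):
    """Fit P(t, f) = sum_z P(z) P(t|z) P(f|z) to a nonnegative T x F array X by EM.

    fixed_pf: optional F x K array; the first K components use these frequency
    distributions P(f|z) and keep them fixed (e.g. hypothetical pre-learned
    music components). Returns pz (Z,), pt (T, Z), pf (F, Z) and the
    log-likelihood sum_{t,f} X log P(t, f) recorded at each iteration.
    """
    rng = np.random.default_rng(seed)
    T, F = X.shape
    eps = 1e-12
    pz = np.full(n_comp, 1.0 / n_comp)
    pt = rng.random((T, n_comp))
    pt /= pt.sum(axis=0)
    pf = rng.random((F, n_comp))
    pf /= pf.sum(axis=0)
    k = 0 if fixed_pf is None else fixed_pf.shape[1]
    if k:
        pf[:, :k] = fixed_pf / fixed_pf.sum(axis=0)
    loglik = []
    for _ in range(n_iter):
        joint = pz * pt[:, None, :] * pf[None, :, :]  # P(z) P(t|z) P(f|z), shape (T, F, Z)
        model = joint.sum(axis=2) + eps              # P(t, f)
        loglik.append(float(np.sum(X * np.log(model))))
        # E-step: posterior P(z | t, f), weighted by the observed "counts" X.
        weighted = X[:, :, None] * (joint / model[:, :, None])
        # M-step: renormalise the expected counts for each factor.
        pz = weighted.sum(axis=(0, 1))
        pz /= pz.sum()
        pt = weighted.sum(axis=1)
        pt /= pt.sum(axis=0) + eps
        new_pf = weighted.sum(axis=0)
        new_pf /= new_pf.sum(axis=0) + eps
        new_pf[:, :k] = pf[:, :k]                    # fixed components stay fixed
        pf = new_pf
    return pz, pt, pf, loglik

def separate(X, pz, pt, pf, voice_idx):
    """Split X by the expected fraction of draws in each (t, f) cell that the
    model attributes to the components listed in voice_idx."""
    joint = pz * pt[:, None, :] * pf[None, :, :]
    frac_voice = joint[:, :, voice_idx].sum(axis=2) / (joint.sum(axis=2) + 1e-12)
    voice = X * frac_voice
    return voice, X - voice

# Toy example (hypothetical data): music in frequency bins 0-3 at every frame,
# voice in bins 6-8 only in frames 4-7.
T, F = 8, 10
music_pf = np.zeros((F, 1))
music_pf[0:4, 0] = 1.0
X = np.zeros((T, F))
X[:, 0:4] += 10.0
X[4:, 6:9] += 10.0

pz, pt, pf, ll = plca(X, n_comp=2, n_iter=100, seed=1, fixed_pf=music_pf)
voice, music = separate(X, pz, pt, pf, voice_idx=[1])
```

Holding the music components fixed is one way to decide which learned components represent the background; without such a constraint the component labels are arbitrary and `voice_idx` would have to be chosen some other way. Because the split uses fractions that sum to one in each cell, the voice and music estimates always add back up to the observed spectrogram, matching the additive model.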

File size for download: 1.6 MB.

File format: PDF.
